DeepLabCut Model Zoo

Here we provide model weights that are already trained on a wide range of animals & scenarios.

You can use these models for video analysis (inference) without the need to train new models yourself. Or, if you need to fine-tune the model, you can use 10X less data than with the original DeepLabCut weights 🔥.

NEWS: Read our first preprint about the Model Zoo:

SuperAnimal pretrained pose estimation models for behavioral analysis. Shaokai Ye, Anastasiia Filippova, Jessy Lauer, Maxime Vidal, Steffen Schneider, Tian Qiu, Alexander Mathis, Mackenzie Weygandt Mathis

Model Zoo SuperAnimals 🔥

We present a new framework for using DeepLabCut across species and scenarios, from the lab to the wild. We are alpha-releasing these models so that users can apply DeepLabCut in two common scenarios with zero training required. These models are trained in the TensorFlow 2.x framework (PyTorch weights are also downloadable and will soon be fully integrated into DLC3.0).

SuperAnimal-Quadruped: this has been trained on over 40K images of animals that have 4 legs, from mice, rats, horses, dogs, and cats to elephants and gazelles. It has 39 keypoints.

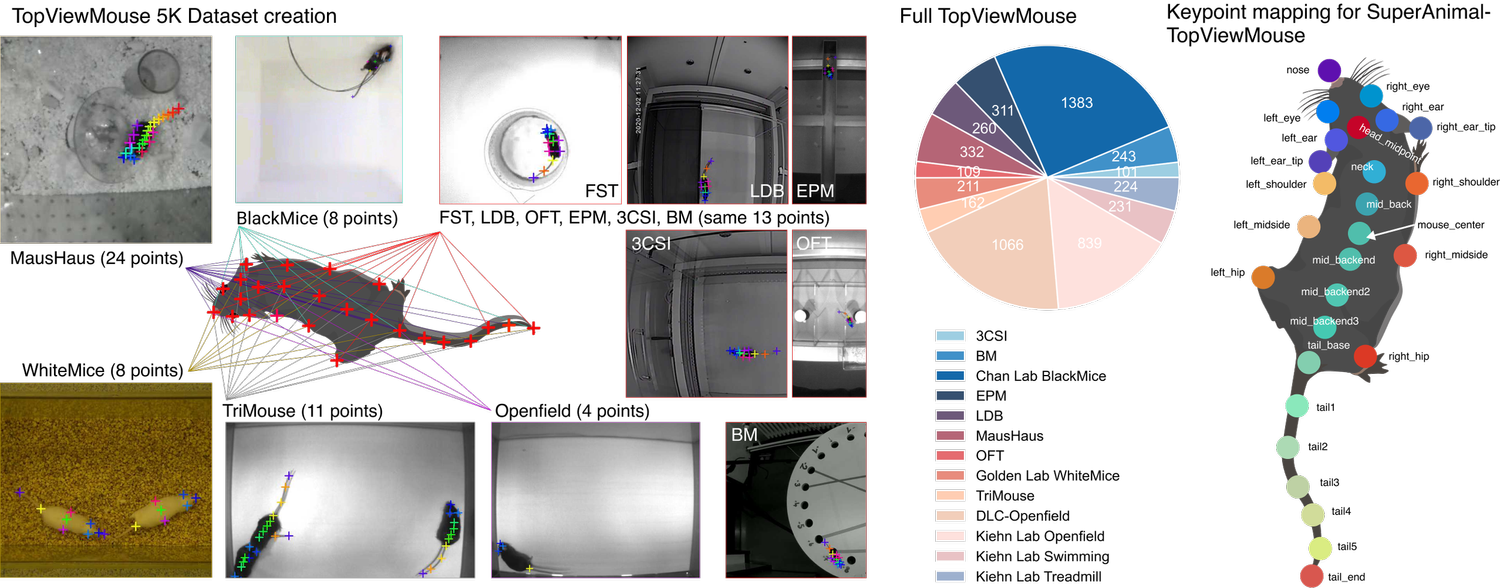

SuperAnimal-TopViewMouse: this has been trained on over 5K mice from videos across diverse lab settings; there is a known C57 bias, but some CD1 (white) mice are also included. It has 26 keypoints.
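For readers who prefer to run the SuperAnimal models locally rather than in the browser, the following is a minimal sketch, not an official recipe. It assumes a DeepLabCut 2.3-style installation in which `deeplabcut.video_inference_superanimal` accepts a list of videos, a model name, a `scale_list`, and a `videotype`; the signature may differ in other releases, so check the documentation for your version. The model name strings (`superanimal_quadruped`, `superanimal_topviewmouse`) are assumptions based on that documentation, and `make_scale_list` and `run_superanimal` are hypothetical helpers introduced only for illustration.

```python
from typing import Dict, List, Sequence

# Keypoint counts are taken from the descriptions above; the lowercase
# model-name strings are an assumption based on the DeepLabCut 2.3 docs.
SUPERANIMALS: Dict[str, int] = {
    "superanimal_quadruped": 39,
    "superanimal_topviewmouse": 26,
}


def make_scale_list(min_height: int, max_height: int, step: int) -> List[int]:
    """Return candidate image heights (in pixels), inclusive of both ends."""
    if min_height <= 0 or step <= 0 or max_height < min_height:
        raise ValueError("need 0 < min_height <= max_height and step > 0")
    return list(range(min_height, max_height + 1, step))


def run_superanimal(
    videos: Sequence[str],
    superanimal_name: str,
    scale_list: Sequence[int],
    videotype: str = ".mp4",
) -> None:
    """Hypothetical wrapper around DeepLabCut's SuperAnimal video inference."""
    if superanimal_name not in SUPERANIMALS:
        raise ValueError(
            f"unknown model {superanimal_name!r}; expected one of {sorted(SUPERANIMALS)}"
        )
    # Imported lazily so the helpers above can be used without DeepLabCut installed.
    import deeplabcut

    # Assumed call shape (DeepLabCut 2.3-style); verify against your installed version.
    deeplabcut.video_inference_superanimal(
        list(videos),
        superanimal_name,
        scale_list=list(scale_list),
        videotype=videotype,
    )
```

A call might look like `run_superanimal(["my_video.mp4"], "superanimal_quadruped", make_scale_list(200, 600, 50))`, where `my_video.mp4` is a placeholder path. The scale list gives the image heights the model tries, which can help when your animals appear larger or smaller than in the training data.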

Test your videos on Google COLAB!

Simply click on the blue icon below to try it out on your videos now (and watch our tutorial if you need assistance):

Test your data on 🤗 HuggingFace!

You can take a frame from your video and test our models. Simply upload your image (we do not collect this data), and test a model!

https://huggingface.co/spaces/DeepLabCut/MegaDetector_DeepLabCut (this app was built during the 2022 DLC AI Residency Program)

Hosted user-contributed models:

Below is a list of published models kindly provided in the DeepLabCut framework. All the models below are trained in the TensorFlow 1.x framework (typically 1.13.1-1.15). If you use a model from the zoo in your work, please see how to cite the model below.

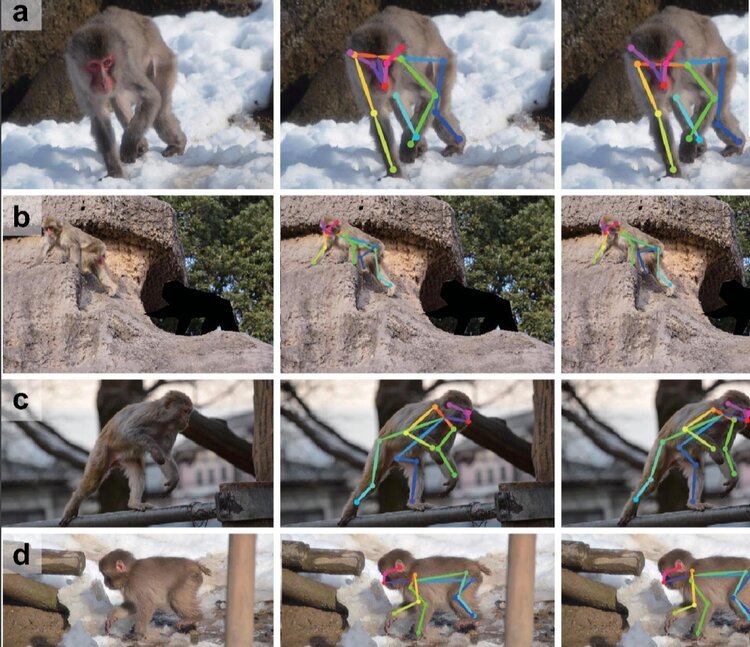

Primates:

full_macaque: From MacaquePose! Model contributed by Jumpei Matsumoto at the University of Toyama. See their paper for many details here! And if you use this model, please also cite their paper.

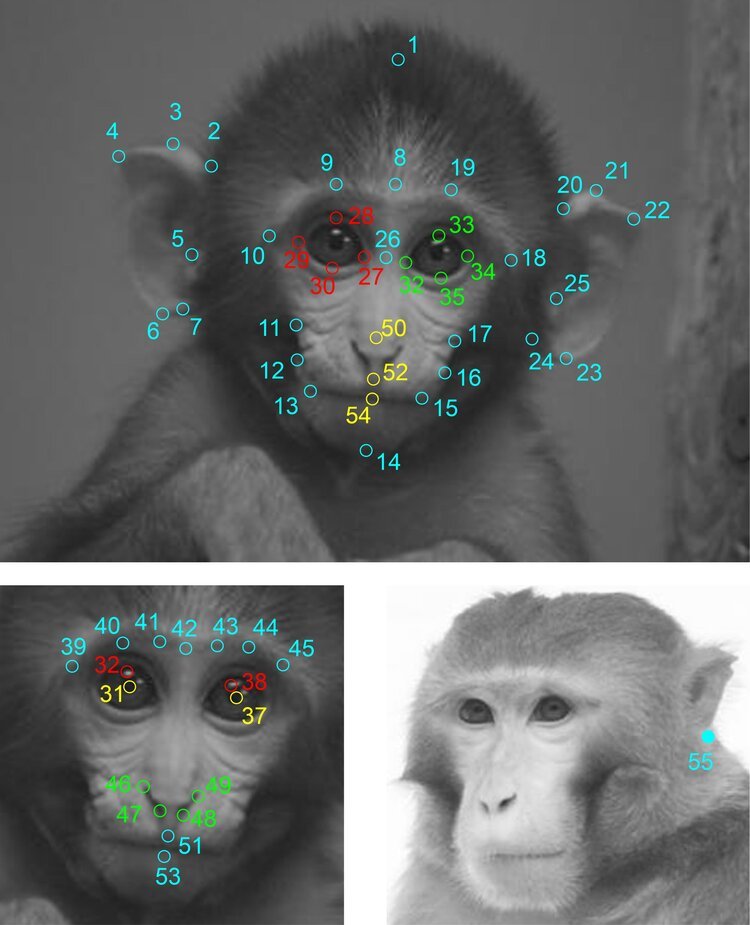

primate_face: Model contributed by Claire Witham at Centre for Macaques, MRC Harwell, UK. This model is trained on photos and videos of rhesus macaque faces, mostly forward-facing or in profile. It includes a range of ages from infant to adult and both sexes. It shows reasonable transfer to other primates, especially other macaque species. Read more here!

full_human: This model can be used to track points shown in full-body poses of humans. This is a network presented in DeeperCut, trained on the MPII-Pose dataset. It expects smaller images as input.

Other animals:

horse_sideview: A pre-trained horse network! Dataset/networks from Mathis et al. 2019 arXiv/WACV 2021. Note that this currently works on videos like the one shown: horses of different sizes and coat colors, but walking left to right. If you use this model, please cite our paper.

mouse_pupil_vclose: Model contributed by Jim McBurney-Lin at University of California Riverside, USA. A pre-trained mouse pupil detector. Video must be cropped around the eye (as shown)! Trained on C57/B6 mice ages 6-24 weeks (both sexes). Read more here!

full_cheetah: A pre-trained full 25-keypoint cheetah model. This is trained in TF1.15 with a DLC-ResNet-152 with a pairwise model (see Joska et al. 2021 ICRA for details and citation!). Note that the network was trained on large GoPro videos (2704x1520), so large videos are the expected input.

Please note that full_cat and full_dog, released in March 2020, have been sunset as of Nov 2022; instead, use the SuperAnimal-Quadruped model.
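As a rough illustration of how one of the hosted models above might be applied to your own videos, here is a minimal sketch. It assumes a DeepLabCut 2.x installation in which `deeplabcut.create_pretrained_project` accepts a `model` name together with `videotype`, `analyzevideo`, `createlabeledvideo`, and `copy_videos` arguments; whether every model name listed on this page is accepted depends on your installed version. `check_zoo_model` and `analyze_with_zoo_model` are hypothetical helpers, not part of DeepLabCut.

```python
from typing import Sequence

# Model names as listed on this page.
ZOO_MODELS = (
    "full_macaque",
    "primate_face",
    "full_human",
    "horse_sideview",
    "mouse_pupil_vclose",
    "full_cheetah",
)

# Models sunset as of Nov 2022, mapped to the suggested replacement.
SUNSET_MODELS = {
    "full_cat": "SuperAnimal-Quadruped",
    "full_dog": "SuperAnimal-Quadruped",
}


def check_zoo_model(name: str) -> str:
    """Return the name if it is a hosted model; raise ValueError otherwise."""
    if name in SUNSET_MODELS:
        raise ValueError(
            f"{name} has been sunset; use {SUNSET_MODELS[name]} instead"
        )
    if name not in ZOO_MODELS:
        raise ValueError(f"unknown model {name!r}; expected one of {ZOO_MODELS}")
    return name


def analyze_with_zoo_model(
    project: str,
    experimenter: str,
    videos: Sequence[str],
    model: str,
    videotype: str = ".mp4",
):
    """Hypothetical wrapper: create a project from a zoo model and analyze videos."""
    check_zoo_model(model)
    # Imported lazily so the name check works without DeepLabCut installed.
    import deeplabcut

    # Assumed call shape (DeepLabCut 2.x); verify against your installed version.
    return deeplabcut.create_pretrained_project(
        project,
        experimenter,
        list(videos),
        model=model,
        videotype=videotype,
        analyzevideo=True,
        createlabeledvideo=True,
        copy_videos=True,
    )
```

For example, `analyze_with_zoo_model("horse-demo", "me", ["horse.mp4"], "horse_sideview")` would, under these assumptions, create a project and analyze the video; the project name, experimenter, and path are placeholders. Keep the input requirements noted in each model description in mind (for instance, cropped eye videos for mouse_pupil_vclose, or large frames for full_cheetah).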

Help us make this even better: more data, more models, more results!

(See who has generously contributed data already!)

In order to make this simple and fast, we launched the DeepLabCut WebApp for Labeling! The images will be used to train “super-nets” (like above).

Questions? Email us: modelzoo@deeplabcut.org.

If you want to get in touch with us about a model contribution, please see this form!

How to cite a model: If you use a model from the zoo in a publication, we ask that you please cite the model paper (see the model description for the citation), and/or, for our unpublished models (non-commercial use only), please cite Ye et al. 2023!

Model Zoo Contributors!

A huge thank you to those who have contributed to the DeepLabCut Model Zoo by labeling data and/or providing models!

Models:

Jim McBurney-Lin, University of California Riverside, USA (mouse eye)

Claire Witham, Centre for Macaques, MRC Harwell, UK (primate face)

Rollyn Labuguen, Jumpei Matsumoto, University of Toyama, Japan (full macaque)

Data:

Sam Golden, University of Washington

Jacob Dahan, Columbia University

Katherine Shaw, U Ottawa, CAN

Nick Hollon, Salk Institute for Biological Studies

Loukia Katsouri, Sainsbury Wellcome Centre, UCL

Lauren (Nikki) Beloate, St. Jude Children's Research Hospital

Leandro Aluisio Scholz, Queensland Brain Institute, The University of Queensland

Diego Giraldo, Johns Hopkins University

Alice E Kane, Harvard Medical School

Nina Harano, Columbia University (Columbia College)

Vasyl Mykytiuk, Max Planck Institute for Metabolism Research

Khaterah Kohneshin, University of Pittsburgh

Michelle Joyce, Temple University

Alejandra Del Castillo, Georgia Institute of Technology

Tara O'Driscoll, UCL

Alice Tang, Columbia University